Understanding the Why and How of 5G NB-IoT NTN Capacity

In this webinar you will discover:

- Why is it important to understand 5G NB-IoT NTN capacity?

- Can NB-IoT work for NTN? Headlines from the analysis we have delivered to ESA.

- What are the elements of 5G NB-IoT NTN system capacity?

- How do you calculate capacity?

- What is the achievable capacity for NGSO and GEO based example scenarios?

- How realistic and precise are capacity calculations?

Presenters: Research Engineer, René Brandborg Sørensen and Sales Executive, Juline Hilsch.

Register now and watch at your leisure.

On demand

FAQ

Are you expecting to support multicast by satellite for 5G IoT devices?

Yes, as a future feature. The roadmap for our 5G NB-IoT waveform is prioritised according to our customers' needs.

What is the capacity of a multicast system?

The capacity of an NB-IoT cell relying on multicast depends on: (1) the MCS of the broadcast channel, which sets the bit rate (and hence the transmission time) of broadcasts and the SNR required to decode them, and (2) the satellite configuration and resulting link budget, which fix the coverage area in which the required SNR can be achieved and, in turn, the number of UEs that can receive the broadcast.

What sort of connection intervals can be supported?

Inter-arrival time can be traded off against the number of supported UEs. In our framework we calculate capacity in terms of the procedural exchanges that can be supported per second for a given type of traffic. So if 50 DoNAS exchanges per second can be supported at the system level, that could be either 300 UEs each completing a DoNAS exchange every 6 seconds or 90,000 UEs each completing a DoNAS exchange every 30 minutes.
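The trade-off is plain arithmetic: the number of supported UEs is the system-level exchange rate multiplied by each UE's inter-arrival time. A minimal sketch, assuming the 50 exchanges per second from the example above; the function name and the one-hour interval are illustrative and not part of our framework:

```python
def supported_ues(exchanges_per_second: float, inter_arrival_s: float) -> float:
    """UEs that can each complete one exchange per inter-arrival interval."""
    return exchanges_per_second * inter_arrival_s


rate = 50  # example system-level capacity, in exchanges per second

print(supported_ues(rate, 6))     # one exchange every 6 seconds -> 300
print(supported_ues(rate, 3600))  # one exchange every hour (illustrative) -> 180000
```

Longer inter-arrival times scale the supported population linearly, as long as the per-second exchange rate stays the same.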

How precise is the capacity modelling compared to simulations?

The precision of both analytical modelling and simulation depends on the “models” used for either. A Monte Carlo simulation of the NTN protocol and environment is probably the most realistic option after experimental validation, provided the simulator takes all the elements of the protocol into account. However, such a simulator takes a long time to develop, and the simulations are computationally heavy and take a long time to run. An analytical framework with models, like our feasibility study, takes less development time and runs very quickly. To our knowledge, there is currently no NTN NB-IoT simulator that gives the same KPIs as our analysis for comparison, and we view our results as good indications or approximations of the performance.

What is the typical timeline for conducting a capacity modelling?

A capacity modelling study can take from a few weeks to a few months, depending on the scope: the scenarios to investigate and the features that need to be modelled.

What would the capacity for my satellite system be?

Capacity for a system is given by the capacity of the individual stages in our framework: paging, random access, and procedural signalling and data exchanges. The capacity of a given satellite configuration, with its accompanying RAN configuration and fading environment, is exactly what GateHouse can approximate well with our feasibility study.

What are the challenges of deploying a GEO constellation compared to a LEO constellation?

In terms of constellations, only a few GSO satellites are required for global coverage, whereas LEO requires a swarm. Of course, LEO can also provide discontinuous global coverage with just a single satellite in polar orbit. At the link level, the Doppler and delay variations are a challenge in LEO. At the system level, there is the challenge of tracking UEs in tracking areas (TAs), which in conventional cellular networks are coupled to specific base stations. In GSO, the delay is a challenge, along with the path loss due to the distance to Earth.

What is the main limitation on the transceiver on the satellite in term of power consumption?

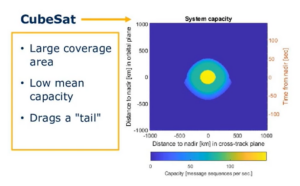

The OFDM transmission scheme has a low power-amplifier efficiency, so a satellite, which is already limited in its real estate for solar panels and in its power budget, has to burn a good amount of energy in the amplification process. This is less of a problem for GEO satellites, where a higher power budget can be attained, and a larger issue for, say, a CubeSat. However, CubeSats experience less path loss than satellites in GSO because of their closer orbits. On the other hand, the lifetime in LEO is only a few years. A CubeSat can provide an NB-IoT RAN at 4 W output power, although with limited capacity and coverage; the outcome depends entirely on the satellite configuration and service scenario.

Are you assuming that the GSO system has several simultaneous gNB cells (one in each beam)?

This is not an assumption in our framework for analysis, but in general our understanding is that a GSO operator would want to provide individual cellular service within each beam to increase spectral and power efficiency. In the bent-pipe architecture, this requires a wide feeder link to be sliced and shifted in frequency for each beam, or some other efficient encapsulation of the RAN in the feeder link.

Will there be any change in maximum coupling loss as the link budget increases?

Yes, the MCL is defined as a linear function of the link budget. As the distance to the satellite increases so does the satellite lifetime and the cost to launch the satellite into orbit. Thus, it is natural to assume that satellites launched to greater distances are equipped more expensively with a larger power budget for the RAN and antenna dishes which are more directive and have larger aperture sizes, which limits noise.

What is a typical latency for a single LEO satellite (i.e. not a large constellation)?

The latency in LEO can range from 2 to 20 milliseconds, depending on the orbital height and the elevation angle. The revisit time in LEO likewise depends on the orbital height and inclination, along with the RAN coverage area. It can vary from about 90 minutes to 12 hours depending on these parameters, with a communication window (visibility window) of 20-200 seconds.
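The dependence of delay on orbital height and elevation angle follows from the slant range between the UE and the satellite. The sketch below uses the standard spherical-Earth slant-range geometry. It covers only the one-way propagation delay on the service link, with no feeder link or processing delay, and the 600 km altitude is a hypothetical example rather than a figure from our analysis:

```python
import math

EARTH_RADIUS_KM = 6371.0           # mean Earth radius (spherical-Earth assumption)
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum


def slant_range_km(altitude_km: float, elevation_deg: float) -> float:
    """Distance from a ground UE to a satellite seen at the given elevation angle."""
    r = EARTH_RADIUS_KM
    s = math.sin(math.radians(elevation_deg))
    return math.sqrt((r * s) ** 2 + altitude_km ** 2 + 2 * r * altitude_km) - r * s


def one_way_delay_ms(altitude_km: float, elevation_deg: float) -> float:
    """One-way propagation delay on the UE-satellite link, in milliseconds."""
    return slant_range_km(altitude_km, elevation_deg) / SPEED_OF_LIGHT_KM_S * 1e3


# Hypothetical 600 km LEO orbit: the delay grows as the elevation angle drops.
for elevation in (90, 45, 10):
    print(elevation, round(one_way_delay_ms(600, elevation), 2))
```

With the satellite directly overhead (90 degrees) the slant range equals the altitude, so a 600 km orbit gives about 2 ms one way. Lower elevation angles and higher orbits give longer delays.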

How quickly does a user switch between one satellite and the next?

The switch between one satellite and another could be as fast as in terrestrial networks. UEs may trigger radio link failure (RLF) and re-select a cell. Should the UE “see” another cell with better link conditions and report it to the eNB, the eNB may initiate a handover as in conventional cellular networks, taking, for example, hundreds of milliseconds in LEO. Of course, this could be problematic if Extended Coverage (EC) UEs are allowed in the cell and a UE attempts a handover at something like 64 repetitions, which could take many seconds to complete in a narrow communication/visibility window.

What are typical turnaround delays in both CubeSat and GSO cases?

Say a procedural exchange involves transmitting 6 one-way messages back and forth in the MO scenario, with 1 additional message (paging) in the MT case. The one-way delay is 2-30 ms in LEO and 120 ms in the GSO case. That gives a total propagation delay of 12-180 ms for LEO and 720 ms for GSO. In addition, the time required for the transmissions, the time on air (ToA), depends on the size of the messages and the SNR conditions during the exchange. A quick estimate would be 6 ms ToA in a perfect scenario and 6 messages x 4 RUs x 16 repetitions x 32 ms RU duration (3.75 kHz) = 12288 ms in a worst-case scenario. Additional overhead would stem from the transmission of the random access preamble (RAP) and any paging.
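The estimate above can be reproduced with a few lines of arithmetic. This is a sketch using only the figures quoted in the answer (6 messages, 4 RUs per message, 16 repetitions, 32 ms RU duration); the function names are illustrative:

```python
def total_propagation_ms(one_way_delay_ms: float, messages: int = 6) -> float:
    """Total propagation delay for an exchange of `messages` one-way messages."""
    return one_way_delay_ms * messages


def worst_case_toa_ms(messages: int = 6, rus_per_message: int = 4,
                      repetitions: int = 16, ru_duration_ms: int = 32) -> int:
    """Worst-case time on air: every message needs all RUs and all repetitions."""
    return messages * rus_per_message * repetitions * ru_duration_ms


print(total_propagation_ms(2), total_propagation_ms(30))  # LEO range: 12 180
print(total_propagation_ms(120))                          # GSO: 720
print(worst_case_toa_ms())                                # 12288
```

For the MT case, passing `messages=7` accounts for the additional paging message in the propagation delay.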

How are devices prioritized?

In our framework, devices do not have a priority. Instead, we calculate the amount of resources required for a procedural exchange and then, given a specific RAN configuration, how many such resource allocations can be fitted per second. We then assume an ‘overhead’ to account for the scheduler being inefficient.
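As a rough illustration of this kind of calculation (not the framework itself), the sketch below divides the resources available per second by the resources one exchange needs and applies a scheduler-efficiency factor. The function, its parameters, and all numbers are hypothetical:

```python
def exchanges_per_second(resources_per_second: float,
                         resources_per_exchange: float,
                         scheduler_efficiency: float) -> float:
    """Exchanges that fit per second once scheduler inefficiency is accounted for."""
    if not 0 < scheduler_efficiency <= 1:
        raise ValueError("scheduler_efficiency must be in (0, 1]")
    if resources_per_exchange <= 0:
        raise ValueError("resources_per_exchange must be positive")
    return resources_per_second * scheduler_efficiency / resources_per_exchange


# Hypothetical figures: 1000 resource units per second offered by the RAN
# configuration, 24 units per exchange, and a scheduler that is 80% efficient.
print(exchanges_per_second(1000, 24, 0.8))
```

A lower efficiency factor models a larger scheduling overhead and reduces the supported exchange rate proportionally.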

What is causing the trailing of capacity in a LEO cell? And why doesn’t the same thing happen when UEs are on the leading edge of the moving cell and also consume a higher level of channel resources?

As a LEO satellite approaches a UE, the UE must first detect the synchronization signals in the DL and synchronize to the cell before it can initiate a procedural exchange and take up resources within the cell. Since the satellite is moving towards the UE, once the UE is able to synchronize to the cell it will find itself in good link conditions within a few milliseconds, so UEs that begin a procedural exchange as soon as they are synchronized will mostly be in good conditions for the exchange. As the satellite moves away from the UE, we assume that the UE can maintain synchronization, perhaps using SIB31 for compensation, but if it initiates a procedural exchange at this point, the link conditions deteriorate further during the exchange. This latter case is what creates the trailing of capacity in the LEO case.

Apart from NB-IoT, do you also expect higher-data-rate MTC over satellite in the long term? E.g. the Rel. 17 feature RedCap, also over NTN?

NB-IoT is a straightforward solution for NTN because it provides coverage at low SNR with very little signalling overhead. When NTN is rolled out, NB-IoT and eMTC will have an advantage in the discontinuous-coverage scenario, i.e. they are designed to work in NTN with only a few satellites present. eMTC may be the next step up for MTC over NTN in terms of features and data rates, but RedCap NR devices are also likely contenders for IoT over NTN. However, the NR rollout requires a larger satellite constellation with continuous coverage. On the other hand, NR NTN gets a big push from future cellular handsets integrating NTN capabilities at low cost: since they are already equipped with GNSS, most of the required changes could be made with a firmware update and an antenna adjustment. So the satellite/constellation rollout for NR NTN could end up being very fast.

What are the differences between LoRaWAN and NB-IoT?

NB-IoT is an LPWAN often compared to LoRaWAN. In general, LoRaWAN is limited in terms of possible QoS and the number of supported devices in comparison to NB-IoT, but LoRaWAN operates in unlicensed spectrum, which can reduce costs. In an NTN context: (1) the LoRa modulation would suffer at the long distances, where only the very high spreading factors would work (without excessive tx power requirements), resulting in a low bit rate, and (2) the large coverage area of an NTN cell means that a large number of UEs could reside within the cell, and here the ‘Aloha’ access mechanism of LoRaWAN is a severe limiting factor on scalability, especially in tandem with low-throughput transmissions that last a long time and are therefore more likely to collide. On the plus side, the LoRa modulation allows for direct Doppler compensation, with no need for SIB31.
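To see why Aloha-style access scales poorly with many UEs and long transmissions, the sketch below uses the textbook pure (unslotted) ALOHA approximation, in which a transmission succeeds with probability exp(-2G) for offered load G. This is a simplification and not a LoRaWAN model: it ignores the capture effect, multiple channels and spreading-factor orthogonality. The airtime and reporting interval are hypothetical:

```python
import math


def offered_load(n_devices: int, airtime_s: float, interval_s: float) -> float:
    """Average number of transmissions started per packet airtime (G)."""
    return n_devices * airtime_s / interval_s


def success_probability(load: float) -> float:
    """Pure-ALOHA probability that a transmission sees no overlapping packet."""
    return math.exp(-2 * load)


# Hypothetical traffic: 1.5 s airtime (high spreading factor), one report per 10 min.
for n in (10, 100, 1000):
    g = offered_load(n, airtime_s=1.5, interval_s=600)
    print(n, round(g, 3), round(success_probability(g), 3))
```

Because the load grows with both the number of devices in the cell and the airtime per packet, the success probability decays exponentially as either increases.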

Could delay be an issue in both a GSO and LEO constellation?

For delay-tolerant applications, the propagation delay is not an issue, but for near-real-time applications such as control and alarms it could indeed be. LEO can accommodate exchanges as short as 40-100 ms, whereas GSO exchanges are on the order of seconds. For delay-tolerant applications, the main difference with regard to the distance of the satellite would of course be the path loss and everything that follows in the satellite configuration to achieve an accommodating MCL.

What is the difference between gNB and eNB?

eNB and gNB are the terms for base stations in 4G and 5G, respectively. NB-IoT is a 4G-based technology that was developed in the 4.5G era. It does, however, fulfil the requirements for the 5G mMTC (massive machine-type communications) scenario, which is why 3GPP decided to use NB-IoT (and eMTC) as technologies for 5G. So NB-IoT is both 4G and 5G, which is why you will often find both eNB and gNB used in the context of NTN IoT. NB-IoT interoperates with the 4G core network, the Evolved Packet Core (EPC), so from that perspective eNB is the more correct term.